Bilateral AI - Research Modules

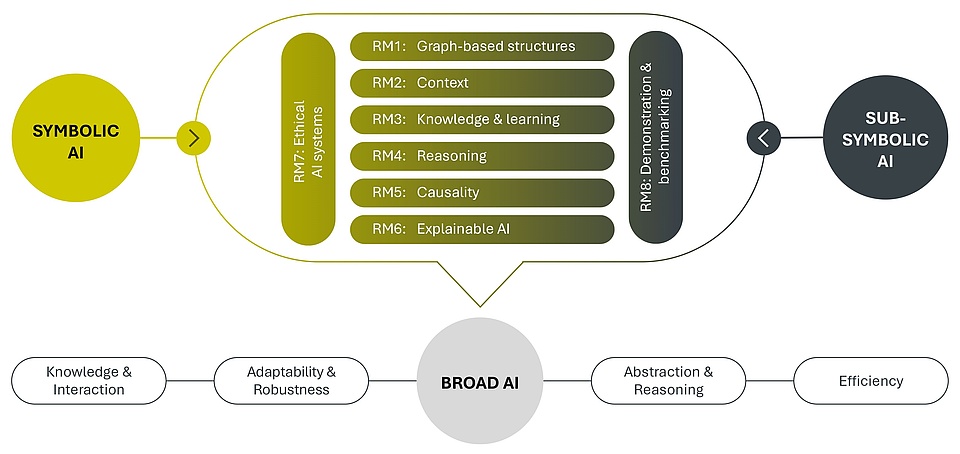

Bilateral AI aims at developing the foundation for broad AI systems with interacting sub-symbolic and symbolic components as depicted in the following Figure giving an overview of the research agenda of the BILAI Cluster of Excellence:

Eight interconnected Research Modules (RMs) will address the following topics:

- Graph-based structures (RM1)

- Context (RM2)

- Knowledge and Learning (RM3)

- Reasoning (RM4)

- Causality (RM5)

- Explainable AI (RM6)

- Ethical AI Systems (RM7)

- Demonstration and Benchmarking (RM8)

Graph-based structures (RM1)

Graph-based structures are highly relevant to all the essential properties of a broad AI. They are inherently symbolic and are often equipped with sub-symbolic attributes such as costs or interaction strengths. They are omnipresent in complex tasks and can appear as navigation maps, as social or physical interaction networks, or as object relations. Graphs are ideal for transferring knowledge: their nodes and edges represent learned or known abstractions of real-world entities; their structure is typically very robust; they can be readily adapted to new situations or even constructed on the fly; they allow for advanced reasoning; and they make efficient algorithms from computer science applicable. Because of their inherently symbolic nature and their suitability for learning and for carrying sub-symbolic elements, graphs are a natural and promising starting point, and a core component, for a bilateral AI approach.

Context (RM2)

Humans typically solve complex tasks by using context, that is, associations with recent or past experiences. New situations can be associated with stored ones to provide context, which makes humans very robust against domain shifts and allows them to adapt quickly. Because only an abstraction of a situation is stored to provide context, memory- and context-based processing is well suited for knowledge transfer. Inspired by the memory system of the brain, we will investigate novel approaches to combining symbolic and sub-symbolic representations of context and memory in bilateral AI systems. Our aim is to use bilateral AI approaches to improve AI systems with respect to robustness, adaptivity, abstraction, and knowledge transfer.

Knowledge and Learning (RM3)

This research module focuses on integrating efficient computational logic, symbolic reasoning, and expert knowledge into machine learning. It covers the use of efficient methods from computational logic for optimizing symbolic machine learning models, as well as the integration of learned knowledge into reasoning modules. By combining machine learning with symbolic AI, these approaches will form a foundation of our bilateral vision.

Reasoning (RM4)

This research module aims to enhance the symbolic reasoners needed for tasks that require exact solutions. Examples of such tasks include the formal verification of software correctness, the automatic solving of planning problems and games, and the correct configuration of complex systems. On the one hand, synergies with sub-symbolic approaches will be exploited to generate efficient symbolic encodings of application problems in a user-friendly manner. On the other hand, sub-symbolic techniques will be employed to cope with the inherently large search spaces that symbolic reasoners face.

Causality (RM5)

This research module aims to reveal and exploit the valid causal mechanisms underlying data through efficient causal learning and inference methods based on hybrid, semi-symbolic representations that implement symbolic computations. Causal learning supports advanced reasoning, yields models that adapt well to new situations as long as the causes remain the same, and facilitates knowledge transfer. In our bilateral AI approach, causality is used in both symbolic and sub-symbolic techniques.

Explainable AI (RM6)

This research module aims to exploit symbolic techniques to make deep neural networks more interpretable and explainable. This includes aligning neural networks with symbolic theories as well as integrating symbolic reasoning into deep learning models. We also aim to improve the generation of natural-language explanations and to develop evaluation techniques for explainable AI. Explainable AI is a prerequisite for the interaction of AI systems with humans and for knowledge transfer from AI to humans and vice versa.

Ethical AI Systems (RM7)

Ensuring that AI systems are ethical and responsible is vital in today's digital society and is a key focus across all research modules. In RM7, we explore practical ways to apply policies and ethical principles such as privacy, fairness, non-maleficence, and beneficence. In addition, we employ transparency, compliance, and governance mechanisms to ensure that broad AI can operate in a legally sound, ethically sensitive, and socially acceptable way.

Demonstration and Benchmarking (RM8)

In this cross-cutting research module, we provide showcases for the bilateral methods developed in the other research modules and derive new, concrete research questions and challenges that are fed back to them.