About the project

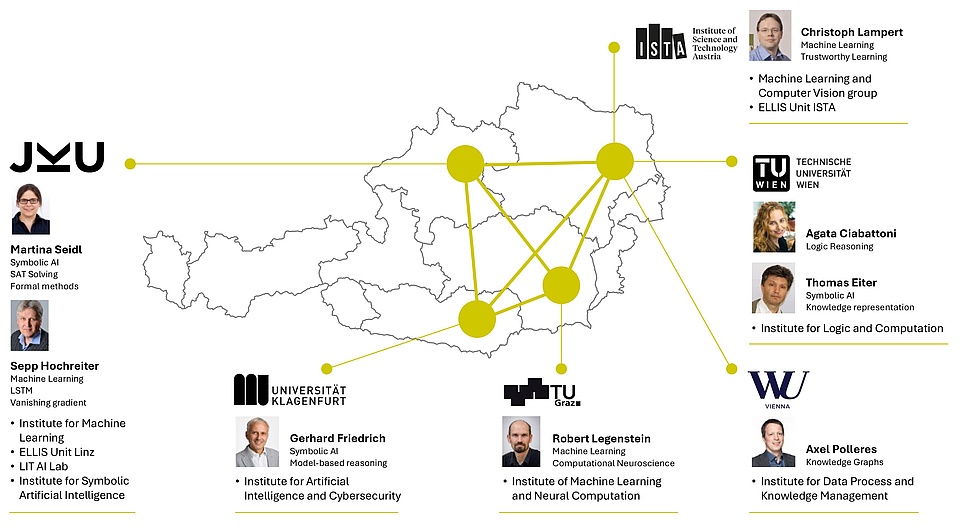

The project “Bilateral AI” aims to lift artificial intelligence (AI) to the next level. Current AI systems are narrow in the sense that they center on a specific application or task, such as object or speech recognition. Our project will combine two of the most important types of AI, which have so far been developed separately: symbolic and sub-symbolic AI. While symbolic AI works with clearly defined logical rules, sub-symbolic AI (such as ChatGPT) is based on training a machine on large datasets to create intelligent behavior. This integration, resulting in a Broad AI, is intended to mirror something that humans do naturally: the simultaneous use of cognition and reasoning skills.

Bilateral AI is proud to support the next generation of AI thinkers!

We’re excited to be a partner of the VCLA student video contest “Mensch und Maschine im Jahr 2035”, which encourages young minds to explore how AI will shape our future. Austrian students from fifth grade up are invited to share their vision of life in 2035 in a short video. (Article in German)


Two types of Artificial Intelligence are being united

For many years, the field of artificial intelligence has had two technically very different approaches. Now, in the Excellence Cluster “Bilateral AI”, they are being combined. TU Wien has published an article exploring this combination. (Article in German)


Autonomous AI-Enabled Robots Need Ethical Guardrails with Guarantees

On March 11, 2025, the Center for AI and Machine Learning (CAIML) Colloquium with Matthias Scheutz of Tufts University, US, took place at TU Wien. The event was co-organized by CAIML and BILAI.
